Superhuman Benchmark

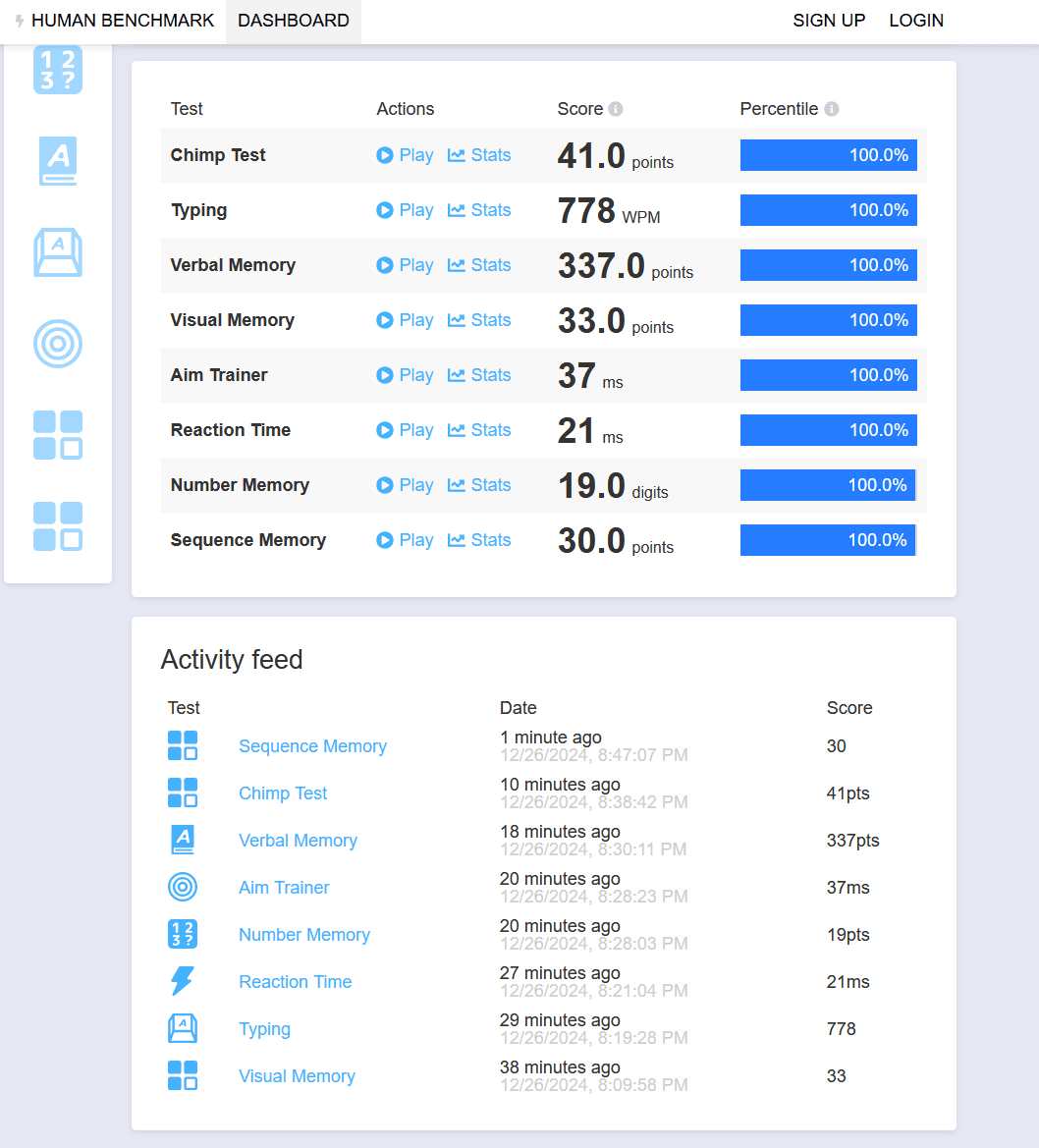

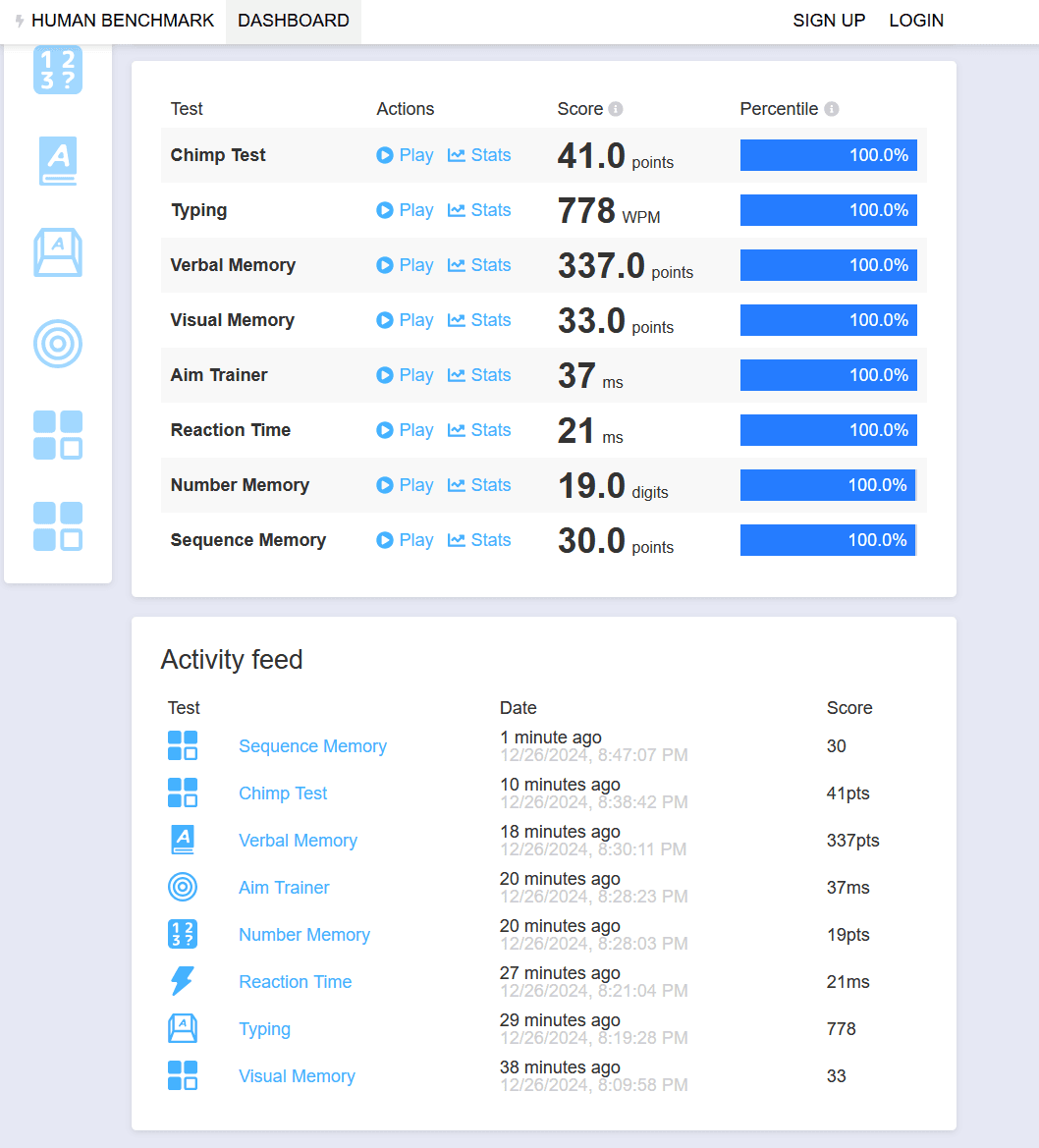

Superhuman Benchmark is a Python script with a command-line interface that solves every test on Human Benchmark with 100% accuracy. It drives the browser with the same mouse and keyboard input a human would use.

Description

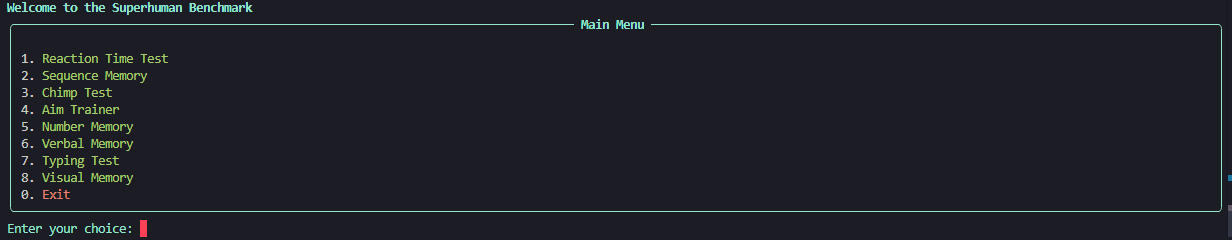

This project is a collection of Python scripts designed to solve every test on Human Benchmark with 100% accuracy. Each menu option corresponds to a specific test on the platform, and each script is optimized to achieve the highest possible score.

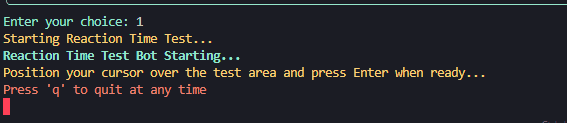

The project includes scripts for the verbal memory, number memory, typing, visual memory, reaction time, aim training, chimp test, and sequence memory tests. Every script interacts with the webpage through mouse movements, clicks, and keyboard input, the same way a human would solve these tests, without modifying the webpage or the game code.
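As one illustration of a script's decision step, here is a minimal sketch for the verbal memory test. It assumes the current word has already been read from the screen (for example with OCR). The class name `VerbalMemorySolver` and the normalization rule are hypothetical, not taken from the project, and a real script would still have to click the SEEN or NEW button afterwards.

```python
from typing import Set


class VerbalMemorySolver:
    """Hypothetical sketch: remembers every word shown so far."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()

    def answer(self, word: str) -> str:
        """Return "SEEN" for a repeated word, otherwise record it and return "NEW"."""
        # Normalize OCR output so stray whitespace or casing
        # does not make a repeated word look new.
        key = word.strip().lower()
        if key in self._seen:
            return "SEEN"
        self._seen.add(key)
        return "NEW"
```

Keeping seen words in a set makes each lookup constant-time on average, so the decision stays fast even late in a long run.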

A main menu script allows users to easily select and run any of the tests, making it simple to demonstrate the automation capabilities across different cognitive challenges.
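A minimal sketch of how such a menu could be structured, assuming one solver function per test. The solver functions here are hypothetical placeholders, and `MENU`, `render_menu`, `dispatch`, and `main` are illustrative names rather than the project's actual code.

```python
from typing import Callable, Dict, Optional, Tuple


def solve_reaction_time() -> str:
    return "reaction time"  # placeholder for a real solver


def solve_aim_trainer() -> str:
    return "aim trainer"  # placeholder for a real solver


# Menu key -> (label shown to the user, solver to run)
MENU: Dict[str, Tuple[str, Callable[[], str]]] = {
    "1": ("Reaction time", solve_reaction_time),
    "2": ("Aim trainer", solve_aim_trainer),
}


def render_menu() -> str:
    """Build the numbered menu text, with a quit option at the end."""
    lines = [f"{key}. {label}" for key, (label, _) in MENU.items()]
    lines.append("q. Quit")
    return "\n".join(lines)


def dispatch(choice: str) -> Optional[str]:
    """Run the solver for `choice`; return None for unknown keys."""
    entry = MENU.get(choice.strip())
    if entry is None:
        return None
    _, solver = entry
    return solver()


def main() -> None:
    """Interactive loop: show the menu until the user enters 'q'."""
    while True:
        print(render_menu())
        choice = input("Select a test: ").strip().lower()
        if choice == "q":
            break
        result = dispatch(choice)
        print("Unknown option, try again." if result is None else f"Finished: {result}")
```

Calling `main()` from the entry script starts the loop. Keeping the menu in a single dictionary means adding a test only requires one new entry.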

This project was inspired by a video in which Code Bullet solves the aim training test. I felt it would be cool to solve all of the tests with 100% accuracy, and to do it without hardcoding pixel positions as he does, instead taking positions relative to where the start test button was clicked.
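The idea of positioning relative to the start button can be sketched as plain coordinate arithmetic. This assumes the anchor is the mouse position right after the start click (in a live script, `pyautogui.position()` returns it) and that targets sit at fixed offsets from it. `Point`, `relative_to`, and `grid_centers` are hypothetical helpers, and the offsets and grid size in the example are made up rather than measured from the site.

```python
from typing import List, NamedTuple


class Point(NamedTuple):
    x: int
    y: int


def relative_to(anchor: Point, dx: int, dy: int) -> Point:
    """Translate an offset measured from the anchor into screen coordinates."""
    return Point(anchor.x + dx, anchor.y + dy)


def grid_centers(top_left: Point, cell_size: int, rows: int, cols: int) -> List[Point]:
    """Centers of a rows x cols grid of square cells, listed row by row."""
    half = cell_size // 2
    return [
        Point(top_left.x + c * cell_size + half, top_left.y + r * cell_size + half)
        for r in range(rows)
        for c in range(cols)
    ]
```

For example, with an anchor at `Point(960, 540)`, `relative_to(anchor, -150, -150)` gives a board corner at (810, 390), and `grid_centers(board, 100, 3, 3)` yields nine click targets starting at (860, 440). Because everything hangs off the anchor, moving or resizing the browser window only changes one measured point.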

Overall, it was a fun weekend project that kinda came out of nowhere, and I'm proud of the result.

Technologies

The project is built with Python, using PyAutoGUI as the main automation library. I hadn't used Python in quite a while, but PyAutoGUI immediately stood out as the perfect tool: its simple yet powerful API made it easy to get back into Python development while providing all the mouse and keyboard control I needed.

For text recognition, I used Tesseract OCR through the pytesseract wrapper. Even though I hadn't worked with OCR before, the Python ecosystem made it straightforward to integrate for reading numbers and words off the screen during the tests.
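A sketch of reading a number with pytesseract, assuming the screenshot has already been cropped to the digits (for example with `pyautogui.screenshot(region=(left, top, width, height))`). The config string uses Tesseract's `--psm 7` (treat the image as a single text line) plus a digit whitelist; whether those settings suit the site's font is an assumption. `read_number` is a hypothetical helper, and its `ocr` parameter exists only so the parsing can be exercised without Tesseract installed.

```python
import re
from typing import Callable, Optional

# Single text line, digits only (assumed settings, not the project's).
DIGITS_CONFIG = "--psm 7 -c tessedit_char_whitelist=0123456789"


def read_number(image, ocr: Optional[Callable[..., str]] = None) -> str:
    """Return only the digits Tesseract found in `image`."""
    if ocr is None:
        # Imported lazily so the parsing logic has no hard dependency.
        import pytesseract
        ocr = pytesseract.image_to_string
    raw = ocr(image, config=DIGITS_CONFIG)
    # Drop newlines, spaces, form feeds and any stray non-digit characters.
    return re.sub(r"\D", "", raw)
```

Stripping everything but digits after OCR is a cheap safety net: Tesseract output often ends with a newline or form feed, and a stray space inside a long number would otherwise produce a wrong answer.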

PyAutoGUI's screenshot functions, combined with basic image processing, were perfect for identifying clickable elements and tracking visual changes. The library's fail-safe, which stops the script by raising an exception when the mouse is moved into a corner of the screen, also helped keep runaway scripts in check, a feature I really appreciated while getting reacquainted with automation tools.
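Tracking a visual change often comes down to polling a pixel. The sketch below assumes a reaction-time style check: wait until one pixel matches a target color, then act. In a live script `get_pixel` could be `pyautogui.pixel`, followed by `pyautogui.click(x, y)`. The function names, coordinates, target color, and tolerance are assumptions; the color would have to be sampled from the page. PyAutoGUI's fail-safe (`pyautogui.FAILSAFE`, on by default) still applies to its mouse and keyboard calls such as the click.

```python
import time
from typing import Callable, Tuple

RGB = Tuple[int, int, int]


def color_close(a: RGB, b: RGB, tolerance: int) -> bool:
    """True if every channel differs by at most `tolerance`."""
    return all(abs(x - y) <= tolerance for x, y in zip(a, b))


def wait_for_color(
    get_pixel: Callable[[int, int], RGB],
    x: int,
    y: int,
    target: RGB,
    tolerance: int = 10,
    timeout: float = 10.0,
    poll: float = 0.0,
) -> bool:
    """Poll (x, y) until it matches `target`; return False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if color_close(get_pixel(x, y), target, tolerance):
            return True
        if poll:
            time.sleep(poll)  # optional back-off to save CPU
    return False
```

A `poll` of zero busy-waits, which spends CPU but minimizes the delay between the color change and the reaction; a small tolerance absorbs slight rendering differences between the sampled color and what the screen reports.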

Things Learned

This project provided valuable insights into browser automation and image processing. I learned how to effectively combine different automation techniques to create robust solutions that can handle various types of cognitive tests.

Processing screen information in real time and optimizing for speed was particularly challenging and educational, since the tests are timed.

Additionally, I gained experience creating user-friendly CLI tools, something I had always had in the back of my mind but never got the chance to build.

Some scripts are not flawless, but they work most of the time, which is good enough for the purpose of this project.

Things I Would Do Differently

While the project achieves its goals, there are several areas I would improve if starting over. For example, I would implement better error handling and recovery for cases where the website layout changes or network issues occur. A more sophisticated logging system would also help with debugging and performance optimization.
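One possible shape for that error handling, sketched as a retry decorator built on Python's standard `logging` module. The decorator name, its defaults, and the logger name are hypothetical; it simply re-runs a failing step a few times and logs each failure before giving up.

```python
import functools
import logging
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")
log = logging.getLogger("superhuman")  # hypothetical logger name


def retry(attempts: int = 3, delay: float = 0.5,
          exceptions: Tuple[Type[Exception], ...] = (Exception,)):
    """Re-run a flaky step up to `attempts` times, logging every failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(func)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(1, attempts + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions as exc:
                    log.warning("%s failed (attempt %d/%d): %s",
                                func.__name__, attempt, attempts, exc)
                    if attempt == attempts:
                        raise  # out of retries: let the caller decide
                    time.sleep(delay)
            raise RuntimeError("unreachable")
        return wrapper

    return decorator
```

Wrapping individual steps, such as a single screen read, keeps failures local: one bad read gets retried instead of ending the run. Adding `logging.basicConfig(filename="run.log", level=logging.INFO)` at startup would keep a record of every retry for later debugging.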

I would also consider "training" Tesseract on the particular font used in the tests, since the number memory script sometimes fails to read the numbers.

Finally, I would make it easier to close, pause, and resume the script at arbitrary points.
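A minimal sketch of pause, resume, and stop support using `threading.Event`. It assumes the solver loop calls `checkpoint()` between actions while a hotkey handler or another thread calls `pause()`, `resume()`, or `stop()`. `RunControl` and `StopRequested` are hypothetical names, and wiring up the hotkey itself is left out.

```python
import threading


class StopRequested(Exception):
    """Raised inside the solver loop when the user asks to quit."""


class RunControl:
    """Hypothetical pause/resume/stop switch shared between threads."""

    def __init__(self) -> None:
        self._running = threading.Event()
        self._running.set()  # start in the "running" state
        self._stopped = threading.Event()

    def pause(self) -> None:
        self._running.clear()

    def resume(self) -> None:
        self._running.set()

    def stop(self) -> None:
        self._stopped.set()
        self._running.set()  # wake a paused loop so it can exit

    def checkpoint(self) -> None:
        """Block while paused; raise StopRequested once stop() was called."""
        self._running.wait()
        if self._stopped.is_set():
            raise StopRequested
```

Because the loop only checks in at `checkpoint()`, a pause never interrupts a click halfway through, and catching `StopRequested` at the top of a solver gives a single clean exit path.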

Gallery